What is functional testing?

Generally, functional testing means ensuring that each function of the software meets the specified requirements. Given some input, a function meets its requirements when the behavior and output of the application match the expected results, which are usually defined before testing begins.
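To make the definition concrete, here is a minimal Python sketch. The `shipping_cost` function and its pricing rule are hypothetical, invented only for illustration. The point is that the expected outputs are written down from the requirement first, and the actual outputs are then compared against them.

```python
import math

# Hypothetical function under test. Assumed requirement (illustration only):
# parcels up to 1 kg cost 5.00; each additional started kilogram adds 2.00.
def shipping_cost(weight_kg: float) -> float:
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    extra_kg = max(0, math.ceil(weight_kg) - 1)
    return 5.00 + 2.00 * extra_kg

# Expected outputs derived from the requirement *before* testing.
EXPECTED = [
    (0.5, 5.00),
    (1.0, 5.00),
    (1.2, 7.00),
    (3.0, 9.00),
]

def run_functional_checks():
    """Return (input, expected, actual) for every case that misses its requirement."""
    failures = []
    for weight, expected in EXPECTED:
        actual = shipping_cost(weight)
        if actual != expected:
            failures.append((weight, expected, actual))
    return failures

print(run_functional_checks())  # [] means every case met its requirement
```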

Below, we list several commonly used functional testing types. Before going into them, however, it is important to note that most functional testing methodologies require technical specification documents. Such a document outlines the desired functionality of a product, its use cases, and, sometimes, its user flows.

These documents are key because not only do they help build a product, but they also allow for efficient and purposeful testing. As they say, ‘You will never be able to hit a target that you cannot see.’

What is non-functional testing?

Non-functional testing is just what it sounds like: a software testing process that checks the non-functional aspects of an application. Broadly, everything that is not covered by functional testing is verified by non-functional testing.

For example, a non-functional test could check how many users can log in to or use a system simultaneously.

Below, we list several types of non-functional testing to help clarify which non-functional aspects can be tested in the first place, and how to go about it.

Functional testing types

As mentioned above, functional testing is only a broad category of testing methodologies. Functional testing itself does not provide any specifics, apart from the common characteristic the following types share: they are all trying to verify the functionality of a software application.

The specifics for each type, though, vary significantly and are given below.

Unit testing

Unit testing is performed on small pieces of an application, i.e., units or components. Each component is tested separately, often by multiple tests.

In general, unit testing is considered the lowest level of testing, as it covers the smallest testable pieces of software.

Usually, unit testing is performed prior to integration testing (see below) and requires quite a lot of time to set up and complete.

For this methodology, general steps include:

- Test preparation

- Implementation

- Running the tests

If you would like a thorough description of the unit testing process, go over to Software Testing Fundamentals and give it a read.

Test preparation happens before any code is written, as well as between iterations of testing. Tests are usually implemented and run with an appropriate IDE and language-specific testing libraries.

It is unlikely that any of these steps will yield perfect results on the first try. It usually takes several attempts, as well as additional test cases, to fully test an application. Likewise, once the tests have run, you can expect to iterate on each step while fixing the errors they uncover.
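As a rough illustration of the implementation step, the sketch below uses Python's standard `unittest` module. The `normalize_email` unit is hypothetical; what matters is that one small unit is tested in isolation, from several angles, by multiple tests.

```python
import unittest

# Hypothetical unit under test: a single, self-contained function.
def normalize_email(address: str) -> str:
    """Trim whitespace and lower-case an e-mail address; reject malformed input."""
    cleaned = address.strip().lower()
    local, sep, domain = cleaned.partition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not an e-mail address: {address!r}")
    return cleaned

class NormalizeEmailTests(unittest.TestCase):
    # Several small tests exercise the same unit, each checking one behavior.
    def test_lowercases_and_trims(self):
        self.assertEqual(normalize_email("  Alice@Example.COM "), "alice@example.com")

    def test_leaves_clean_address_unchanged(self):
        self.assertEqual(normalize_email("bob@example.org"), "bob@example.org")

    def test_rejects_missing_at_sign(self):
        with self.assertRaises(ValueError):
            normalize_email("not-an-email")

    def test_rejects_empty_domain(self):
        with self.assertRaises(ValueError):
            normalize_email("carol@")
```

Saved to a file, these tests can be run with `python -m unittest` followed by the file name; any failure points directly at the unit and behavior involved.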

Regression testing

Regression testing differs considerably from unit testing in that it involves re-running functional tests throughout the development process. This is done to verify that previously developed functionality still works after new code is added or existing functions are modified. Regression testing is also needed when performance issues or defects are detected.

However, it is not always necessary to re-run every existing test. Due to limited resources and time, some groups of tests may have a higher priority than others; in that case, prioritized regression testing is done.

Likewise, test cases that are not relevant to the modifications made do not need to be run again. Running only the relevant subset is called selective regression testing.
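Both ideas can be sketched in a few lines of Python. The test names, the mapping from tests to the modules they cover, and the priority numbers below are hypothetical; in a real project that mapping might come from coverage data or team conventions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RegressionTest:
    name: str
    covers: frozenset  # modules the test exercises (hypothetical mapping)
    priority: int      # lower number = more important

def select_tests(tests, changed_modules, budget=None):
    """Selective: keep only tests that touch a changed module.
    Prioritized: order them by priority and, if a budget is given, keep the top ones."""
    changed = set(changed_modules)
    relevant = [t for t in tests if t.covers & changed]
    relevant.sort(key=lambda t: (t.priority, t.name))
    return relevant if budget is None else relevant[:budget]

SUITE = [
    RegressionTest("test_login", frozenset({"auth"}), priority=1),
    RegressionTest("test_checkout_total", frozenset({"cart", "pricing"}), priority=1),
    RegressionTest("test_discount_codes", frozenset({"pricing"}), priority=2),
    RegressionTest("test_profile_page", frozenset({"profile"}), priority=3),
]

# A change to the pricing module selects only the pricing-related tests.
print([t.name for t in select_tests(SUITE, {"pricing"})])
# ['test_checkout_total', 'test_discount_codes']

# Changes to pricing and auth, but time for only two tests: keep the highest priority.
print([t.name for t in select_tests(SUITE, {"pricing", "auth"}, budget=2)])
# ['test_checkout_total', 'test_login']
```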

Regression testing can be done manually or by using automation. If you are interested, this resource gives a good overview of utilizing regression testing and can help you decide whether you would like to automate it or not.

Smoke testing

Smoke testing is usually done before other testing methodologies, to check whether a deployed build is stable. It is also sometimes referred to as “Build Verification Testing.”

Smoke test cases make up only a small subset of all tests and are far from exhaustive. They cover only the most important functionality, the features critical to the application.

The simplest example is a test that checks whether an application runs at all. Another example is a test that verifies that clicking the main elements of an application actually triggers an action.
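For a web application, a smoke test can be as small as requesting a few critical pages and checking that each answers successfully. The sketch below uses only the Python standard library. The tiny `DemoHandler` server stands in for a real deployed build, and the list of critical paths is an assumption you would replace with your own.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the deployed build: answers on two pages."""
    def do_GET(self):
        if self.path in ("/", "/login"):
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b"ok")
        else:
            self.send_error(404)

    def log_message(self, *args):  # silence per-request logging
        pass

CRITICAL_PATHS = ["/", "/login"]  # assumed most important entry points

def smoke_test(base_url, paths=CRITICAL_PATHS, timeout=2.0):
    """Return the paths that did not answer with HTTP 200 (empty list = pass)."""
    failed = []
    for path in paths:
        try:
            with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
                if resp.status != 200:
                    failed.append(path)
        except OSError:  # connection refused, timeout, or HTTP error status
            failed.append(path)
    return failed

def start_demo_server():
    """Start the stand-in build on a free local port; return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"

server, base = start_demo_server()
print(smoke_test(base))                # [] -> stable enough for further testing
print(smoke_test(base, ["/missing"]))  # ['/missing']
server.shutdown()
server.server_close()
```

The check is deliberately shallow: it says nothing about whether the pages are correct, only whether the build is healthy enough to be worth testing in depth.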

Developers sometimes use smoke tests to determine whether an application is ready for further testing. Smoke testing is commonly used within integration testing and user acceptance testing (see below).

Integration testing

If unit testing is the lowest level of testing, integration testing comes next. This methodology combines several units and tests them together.

Integration testing is crucial for applications that contain several components. It tests the interfaces and communication between system components in order to verify the system as a whole. To avoid wasting time and to test a complex system constructively, it is important to fully test its individual parts before determining how they work when connected. Otherwise, bugs from different components get mixed together and frustrate developers. It is neither easy nor calming to go back and forth between components, fixing bugs along the way, only to discover new ones somewhere else.
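The sketch below shows what an integration test can look like in Python. Two hypothetical components, a `UserRepository` backed by SQLite and a `RegistrationService` that uses it, are wired together with a real in-memory database instead of a test double, so the test exercises the interface between them.

```python
import sqlite3

class UserRepository:
    """Component 1 (hypothetical): persistence backed by SQLite."""
    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY)")

    def add(self, email: str) -> None:
        self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))

    def exists(self, email: str) -> bool:
        row = self.conn.execute("SELECT 1 FROM users WHERE email = ?", (email,)).fetchone()
        return row is not None

class RegistrationService:
    """Component 2 (hypothetical): business logic that talks to the repository."""
    def __init__(self, repo: UserRepository):
        self.repo = repo

    def register(self, email: str) -> bool:
        email = email.strip().lower()
        if self.repo.exists(email):
            return False
        self.repo.add(email)
        return True

def test_registration_persists_through_real_repository():
    # Both components are wired together with a real (in-memory) database.
    conn = sqlite3.connect(":memory:")
    service = RegistrationService(UserRepository(conn))
    assert service.register("Dana@Example.com") is True
    assert service.repo.exists("dana@example.com")         # data crossed the interface
    assert service.register("dana@example.com ") is False  # duplicate detected via the DB
    conn.close()

test_registration_persists_through_real_repository()
```

Each component would already have unit tests of its own; this test only targets what can break when they are connected, such as the service and repository disagreeing on how e-mail addresses are normalized.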

User Acceptance Testing (UAT)

Sometimes UAT is referred to as beta or end-user testing and is defined broadly as a testing process that verifies that the software ‘works for the user.’

With the previously mentioned testing methodologies, developers try to determine whether the application has functional defects, find any points where the software crashes, and check whether the technical requirements are met.

While these investigations are crucial, none of them would matter if the application did not do what the user expects or wants it to do. Naturally, UAT is performed after the software is fully or almost fully developed, as the last stage of testing.

To get the most out of UAT, developers should test as thoroughly as possible before beta testing. Even if they are confident in the quality of the software, user acceptance testing is crucial before rolling out a product or releasing a new version of it.

Non-functional testing types

Performance testing

Performance testing is one of the most commonly performed types of testing. Its goal is to determine the responsiveness, speed, and stability of a system or software application. Developers often run a system under various conditions and loads to see how it performs.

More importantly, this type of testing helps identify communication and computing bottlenecks within the system. Locating and addressing these bottlenecks can further improve performance. Performance testing can then be run repeatedly, after each code modification, to see how the change affected overall performance.

In general, if you have a load/traffic estimate for your product (either determined by your team or supplied by a client), performance testing can also help determine whether the system meets the performance requirements and can handle the load properly.

Some of the system properties monitored during performance testing include system throughput, processing time, and response time.
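As a minimal Python sketch, the function below calls an operation repeatedly and reports throughput, mean response time, and 95th-percentile response time. The operation being timed is a hypothetical placeholder. In this in-process sketch, response time and processing time coincide; against a real service you would time the full request round trip instead.

```python
import statistics
import time

def measure(operation, runs=200):
    """Call `operation` `runs` times; report throughput and response-time metrics."""
    latencies = []
    start = time.perf_counter()
    for _ in range(runs):
        t0 = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "throughput_per_s": runs / elapsed,
        "mean_response_ms": statistics.mean(latencies) * 1000,
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        "p95_response_ms": statistics.quantiles(latencies, n=20)[-1] * 1000,
    }

# Hypothetical operation under test: sorting 10,000 integers.
metrics = measure(lambda: sorted(range(10_000), reverse=True))
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Recording these numbers after each code change gives a baseline to compare against, which is how repeated performance runs reveal whether a modification helped or hurt.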

Load testing and stress testing, described below, are distinct subsets of performance testing. Each has its own goal and is of critical value to any piece of software.

Load testing

As mentioned, load testing is a subcategory of performance testing, and one important enough to deserve its own section. Its goal and process are narrower than those of performance testing as a whole.

The load testing process simulates different user load scenarios to check system behavior under low, normal, and high loads. Its main goal is to determine the upper load limit of a system. An application is usually tested against its expected user load, with the goal of handling a load 20% to 50% higher than expected.
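The arithmetic behind the 20% to 50% headroom, and the idea of firing many simulated users at once, can be sketched in Python as follows. The expected load of 40 users and the `time.sleep` request are hypothetical placeholders; real load tests are normally run against a deployed system with dedicated tools such as Apache JMeter or Locust.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def load_targets(expected_users, headroom_pct=(20, 50)):
    """User counts 20% and 50% above the expected load, rounded up (integer math)."""
    return [(expected_users * (100 + pct) + 99) // 100 for pct in headroom_pct]

def simulate_load(request, concurrent_users):
    """Run `concurrent_users` simulated users at roughly the same time;
    return (error_count, slowest_response_seconds)."""
    def one_user(_):
        t0 = time.perf_counter()
        try:
            request()
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - t0

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(one_user, range(concurrent_users)))
    errors = sum(1 for ok, _ in results if not ok)
    slowest = max(elapsed for _, elapsed in results)
    return errors, slowest

expected = 40  # hypothetical expected number of simultaneous users
for users in [expected, *load_targets(expected)]:  # 40, 48, 60
    errors, slowest = simulate_load(lambda: time.sleep(0.01), users)
    print(f"{users} users: {errors} errors, slowest response {slowest * 1000:.1f} ms")
```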

Load testing is usually performed toward the end of a project, when functional development is complete or nearly complete.

Stress testing

Similar to load testing, stress testing is a subset of performance testing. In contrast with load testing, its main goal is to determine the reliability, robustness, and recoverability of a system by putting the system under high loads.

In particular, stress testing is used to determine the highest load a system can take and the levels of stress that bring it down. Stress testing is extremely important for software applications, especially for their critical functionality. It helps investigate how a system behaves under high traffic or load, when it crashes, and, importantly, how it recovers from those crashes.

Special cases of stress testing involve checking whether data is recovered correctly after a crash and whether system security is compromised under high stress or after a failure.
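The core loop of a stress test, ramping the load up until the system breaks and then checking that it recovers, is sketched below in Python. `SimulatedService` is a hypothetical stand-in with a fixed capacity so the result is deterministic; the step size and the 5% error threshold are illustrative assumptions. Against a real system, each step would use a load generator like the one used for load testing.

```python
class SimulatedService:
    """Hypothetical stand-in for the system under test. Each call serves one batch
    of simultaneous requests; requests beyond `capacity` fail, and the service
    stays flagged as overloaded until a batch fits within capacity again."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.overloaded = False

    def handle(self, batch_size):
        failures = max(0, batch_size - self.capacity)
        self.overloaded = failures > 0
        return failures

def find_breaking_point(service, start=10, step=10, max_load=10_000, max_error_rate=0.05):
    """Ramp the load up step by step; return the first load whose error rate
    exceeds `max_error_rate`, or None if the system survives up to `max_load`."""
    load = start
    while load <= max_load:
        failures = service.handle(load)
        if failures / load > max_error_rate:
            return load
        load += step
    return None

def recovers(service, normal_load):
    """After a breakdown, check that normal load is served without errors again."""
    return service.handle(normal_load) == 0 and not service.overloaded

svc = SimulatedService(capacity=95)
print(find_breaking_point(svc))       # 110 (at 100 users, 5% fail: still within threshold)
print(recovers(svc, normal_load=50))  # True
```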

Usability testing

Usability testing evaluates software by testing it with real users. Ultimately, poor usability can be a deal-breaker, even if your application is a perfect, bug-free, and functional piece of software.

Usually, the process involves users completing a set of tasks while researchers observe them and take notes. The test can collect both quantitative and qualitative data and is conducted much like a research study. Formal processes for such research involve a study plan, methods (study design, hypotheses), and materials (questionnaires, surveys, etc.). Such testing can be performed in or out of a lab setting and might require a lot of preparation time.

Usability testing can shed light on how users perceive your application, how long it takes them to complete tasks, and how satisfied they are with the functionality of the software. Note that the biggest obstacles in such tests are the biases and expectations of the researcher. Usability testing is therefore one of the more subjective testing methods, and it is quite hard to organize correctly. Experience UX explains usability testing in more depth and provides free resources, as well as services to help do it well.
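On the quantitative side, two common measures are the task completion rate and the time it takes users to complete a task. The Python sketch below computes both from session records; the participants and timings are made-up illustration data, and reporting the median time for successful attempts only is one reasonable choice among several. Qualitative observations still need to be analyzed separately.

```python
from statistics import median

# Hypothetical session records for one task: (participant, completed?, seconds spent)
SESSIONS = [
    ("P1", True, 48.0),
    ("P2", True, 62.5),
    ("P3", False, 120.0),
    ("P4", True, 55.0),
    ("P5", True, 71.0),
]

def summarize_task(sessions):
    """Completion rate plus median time-on-task for successful attempts only."""
    completed_times = [secs for _, done, secs in sessions if done]
    return {
        "completion_rate": len(completed_times) / len(sessions),
        "median_time_s": median(completed_times) if completed_times else None,
    }

print(summarize_task(SESSIONS))  # {'completion_rate': 0.8, 'median_time_s': 58.75}
```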


Security testing

As with most testing types, the name of security testing already explains its goal. More specifically, it aims to assess how well data is protected and communicated, and to uncover system vulnerabilities.

Engineers usually program deliberate attacks on the system, observe how it performs under them, and adjust appropriately to comply with security requirements.
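A classic deliberate attack is SQL injection. The Python sketch below aims a couple of well-known injection strings at two versions of a hypothetical login check: one that pastes user input into the SQL text (vulnerable on purpose) and one that uses parameterized queries via the standard `sqlite3` module. The table, user, and payload list are illustrative assumptions; real security testing covers far more attack types.

```python
import sqlite3

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn

def login_unsafe(conn, name, password):
    # Vulnerable on purpose: user input is pasted directly into the SQL string.
    query = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None

def login_safe(conn, name, password):
    # Parameterized query: the driver treats the input strictly as data.
    query = "SELECT 1 FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone() is not None

ATTACK_PAYLOADS = ["' OR '1'='1", "alice' --"]  # well-known SQL injection strings

def injection_findings(login):
    """Return the payloads that let an attacker log in without the password."""
    conn = make_db()
    found = [p for p in ATTACK_PAYLOADS if login(conn, p, p)]
    conn.close()
    return found

print(injection_findings(login_unsafe))  # ["' OR '1'='1", "alice' --"]
print(injection_findings(login_safe))    # []
```

A non-empty result is a finding to fix before launch; here, switching to parameterized queries closes both holes.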

Not only does it help discover threats and vulnerabilities in the tested application, but it also helps identify potential risks in the system and its exploitable elements, which can be addressed by adjusting the implementation before the product is launched.

As you can see, security testing can become a daunting and enormous category. It is therefore usually treated as an umbrella category that includes subcategories such as security and vulnerability scanning, risk assessment, and ethical hacking.

As with usability testing, security tests are performed after most of the product functionality is implemented.

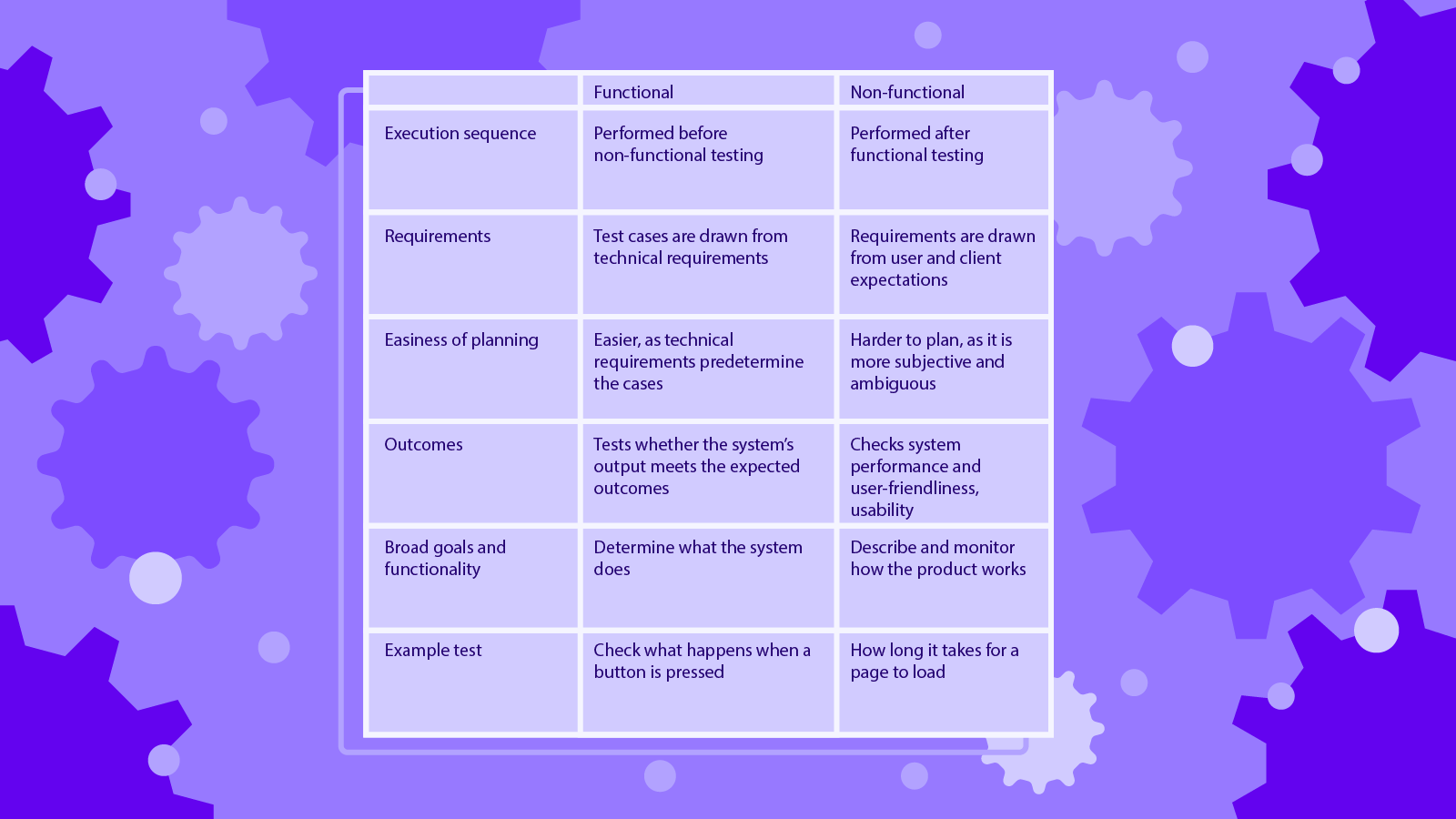

Functional vs non-functional testing

The main difference between these testing types, indicated by the names, is that functional testing verifies the functional requirements, and the rest of the requirements are checked via non-functional testing.

Functional testing focuses on the expected behavior of the system, as defined by the functional requirements. Once the functionality is verified, the system can then be tested on non-functional metrics, such as performance and usability.

It is therefore strongly recommended that functional testing is done prior to any non-functional testing.

When testing software, a technical specification document can serve as a guide to functional testing, which makes it easier to determine the test cases and expected outcomes.

For non-functional testing, there is more ambiguity about how the system should perform. For some types of testing, such as performance testing, there might be an estimate of the expected load or traffic. However, it is still only an estimate, not a precise number for developers to aim for.

Especially when it comes to user opinions, e.g., in usability testing, the line between expected and actual performance becomes even blurrier and harder to aim for.

Closing

In this article, we explored the two most general categories of testing, functional and non-functional, by giving definitions and examples of each.

Both testing types add tremendous value when verifying product requirements, and we also outlined their differences toward the end of the article.